Motivation

Knowledge graphs (KGs) store facts in their graph structure. KG embedding represents a KG in a low-dimensional vector space that preserves the KG's inherent structure.

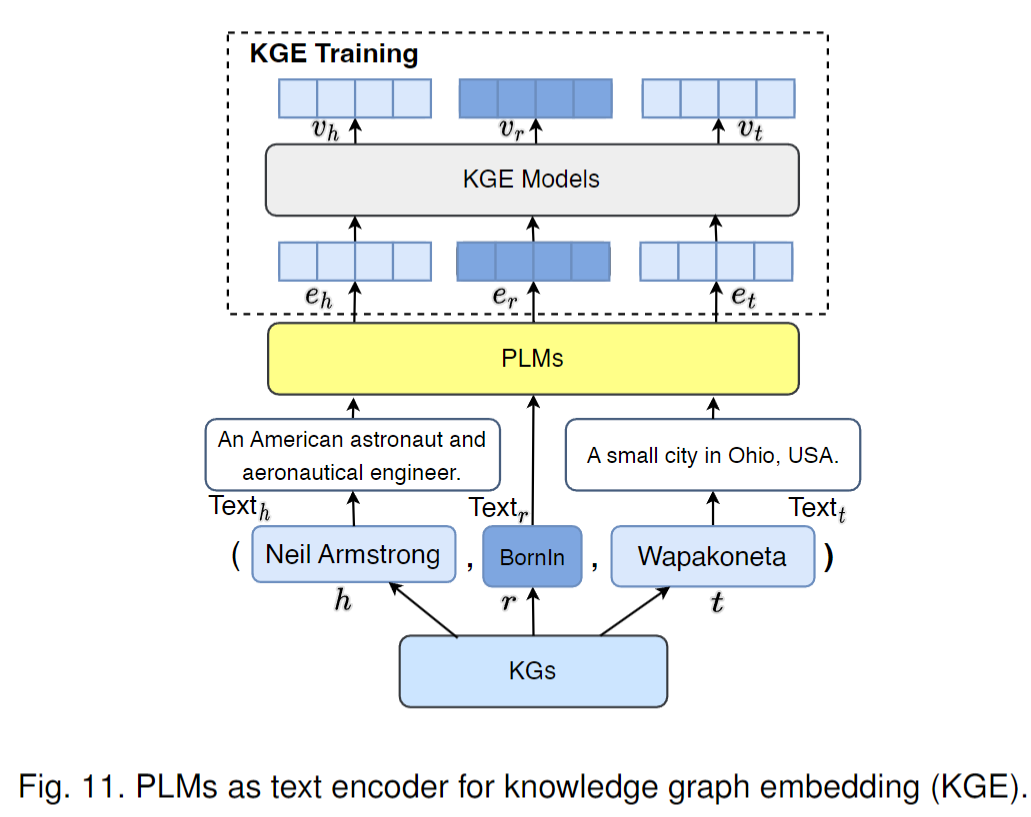

Recent studies apply pre-trained language models (PLMs) to encode textual information and generate representations for long-tail and emerging entities.

PLM-based KG embeddings are usually deployed as static artifacts, which makes them hard to modify without re-training.

KG embeddings editing

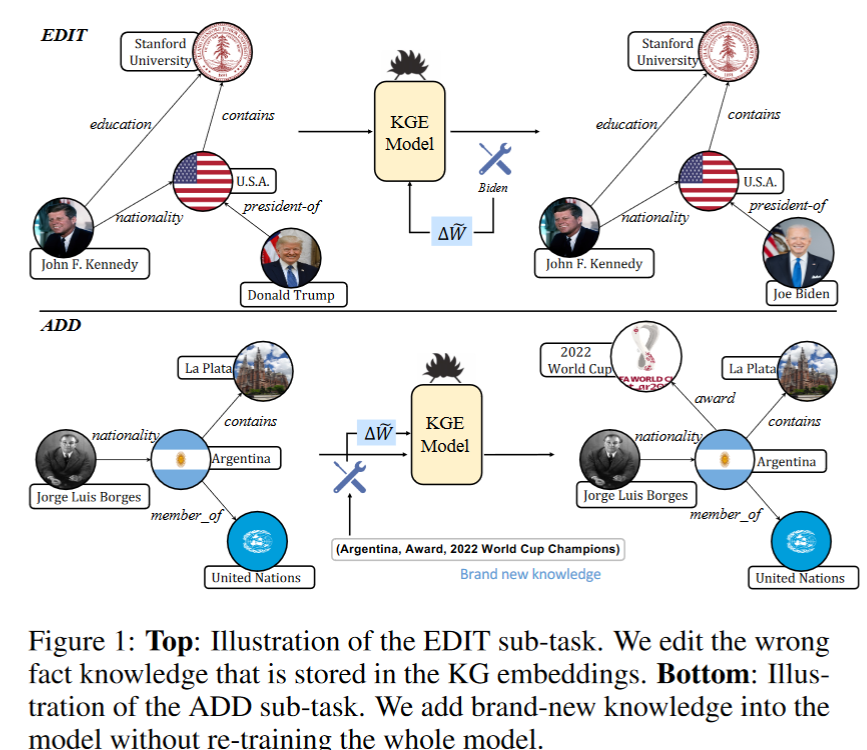

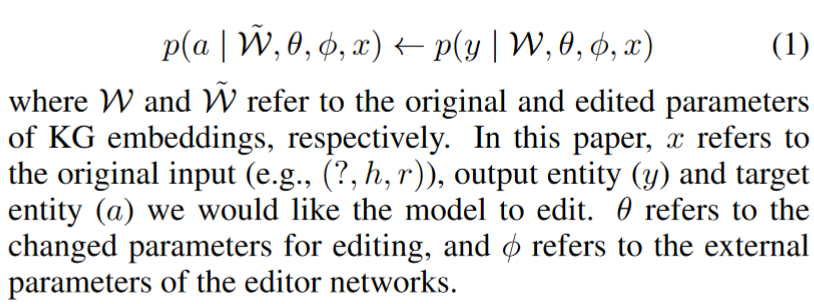

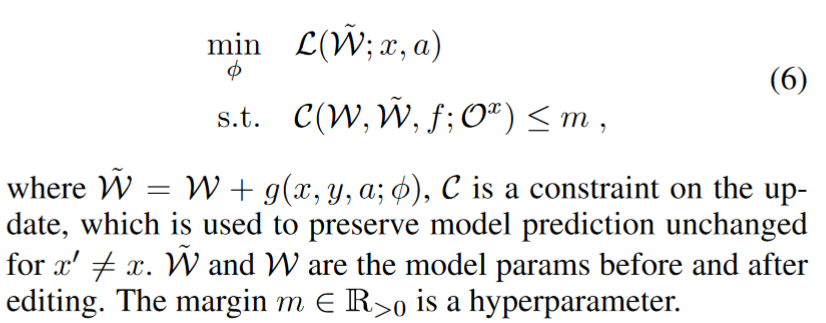

Editing language model-based KG embeddings aims to enable data-efficient and fast updates to KG embeddings within a small region of the parameter space, without affecting performance on the rest.

KG embedding editing = a learning-to-update problem.

Contributions

- Propose a new task of editing language model-based KG embeddings and present two datasets.

- Introduce KGEditor, which can efficiently modify incorrect knowledge or add new knowledge.

Methodology

Task Definition

KG: $G=(\mathcal{E},\mathcal{R},\mathcal{T})$

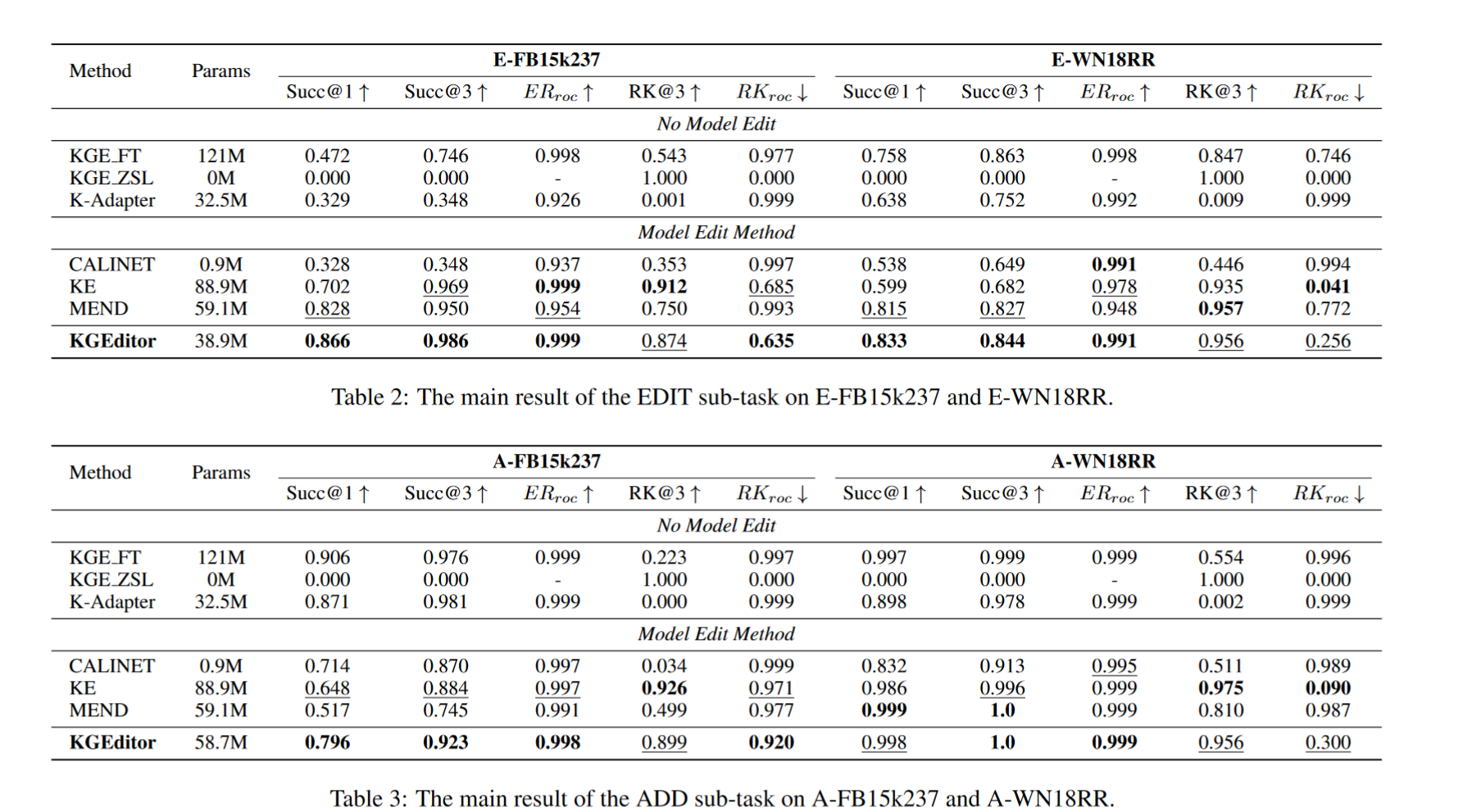

EDIT task: $(h,r,y,a)$ or $(y,r,h,a)$, where $y$ denotes the wrong or outdated entity and $a$ denotes the target entity that should replace it.

Example:

<Barack Obama, president of, U.S.A.> => <Barack Obama, president of, U.S.A., Joe Biden>
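The edit tuple above can be sketched as a small data structure. This is only an illustration of the tuple semantics on a symbolic triple set; the actual task edits the parameters of a PLM-based embedding model, not a triple store. The names `EditRequest`, `apply_edit`, and the `outdated_is_head` flag are hypothetical and do not come from the source.

```python
from dataclasses import dataclass
from typing import Set, Tuple

Triple = Tuple[str, str, str]  # (head, relation, tail)


@dataclass(frozen=True)
class EditRequest:
    """One EDIT-task tuple: (h, r, y, a) or (y, r, h, a)."""
    known: str              # h: the entity that stays fixed
    relation: str           # r
    outdated: str           # y: the wrong or outdated entity
    target: str             # a: the entity that should replace y
    outdated_is_head: bool  # True for the (y, r, h, a) form


def apply_edit(triples: Set[Triple], req: EditRequest) -> Set[Triple]:
    """Return a new triple set in which y has been replaced by a."""
    if req.outdated_is_head:
        old = (req.outdated, req.relation, req.known)
        new = (req.target, req.relation, req.known)
    else:
        old = (req.known, req.relation, req.outdated)
        new = (req.known, req.relation, req.target)
    return (triples - {old}) | {new}


# The example from the notes, written in the (y, r, h, a) form.
kg = {("Barack Obama", "president of", "U.S.A.")}
req = EditRequest(
    known="U.S.A.",
    relation="president of",
    outdated="Barack Obama",
    target="Joe Biden",
    outdated_is_head=True,
)
edited_kg = apply_edit(kg, req)
```

After the edit, a query for the head of (?, president of, U.S.A.) should resolve to the target entity rather than the outdated one.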

ADD task: inject new knowledge into the model.

Evaluation principles:

Knowledge reliability: edited or newly added knowledge should be correctly inferred => knowledge graph completion metric: Success\@1

Knowledge locality: editing KG embeddings should not affect the remaining facts => Retain Knowledge (RK\@k).

Entities correctly predicted by the original model should still be correctly inferred by the edited model.

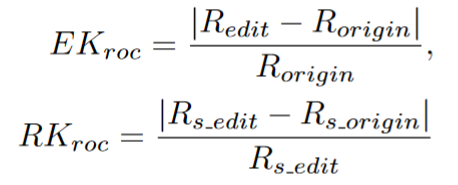

Edited Knowledge Rate of Change and Retaining Knowledge Rate of Change

Knowledge efficiency: modify the model with few training resources => number of tuned parameters

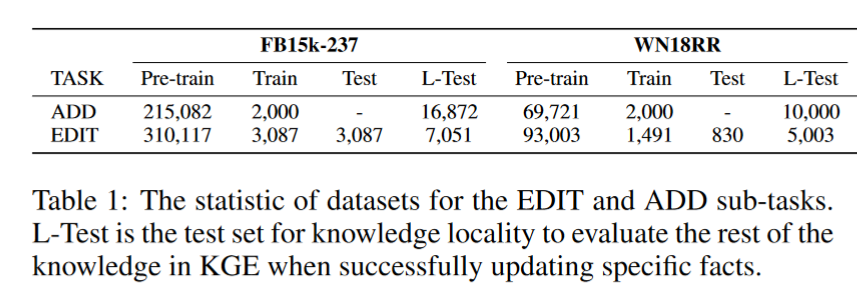

Datasets

Pre-process: remove simple facts that PLMs can easily predict.

Edit task:

- Training: KGs generated by a link prediction model (may contain errors).

- Testing: original KGs.

ADD task:

- Training: original KGs.

- Testing: inductive new facts.

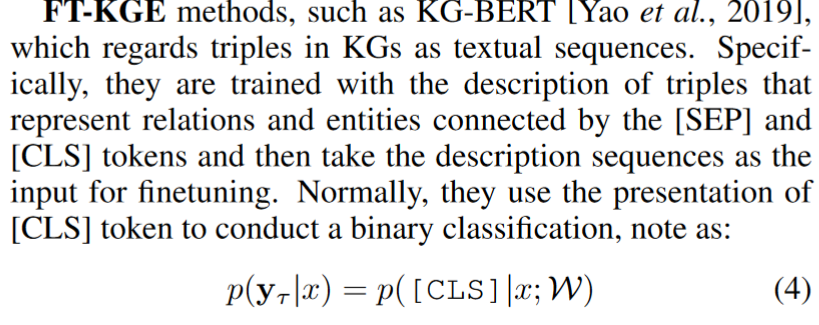

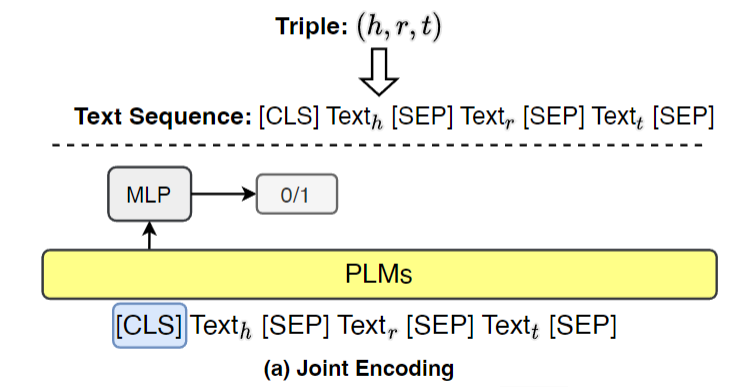

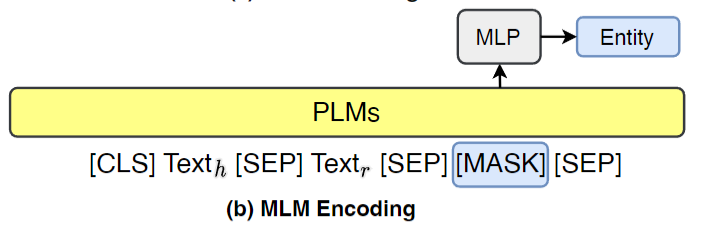

PLMs-based KGE

Finetuning KGE

Prompt tuning KGE

KGE Editing baselines

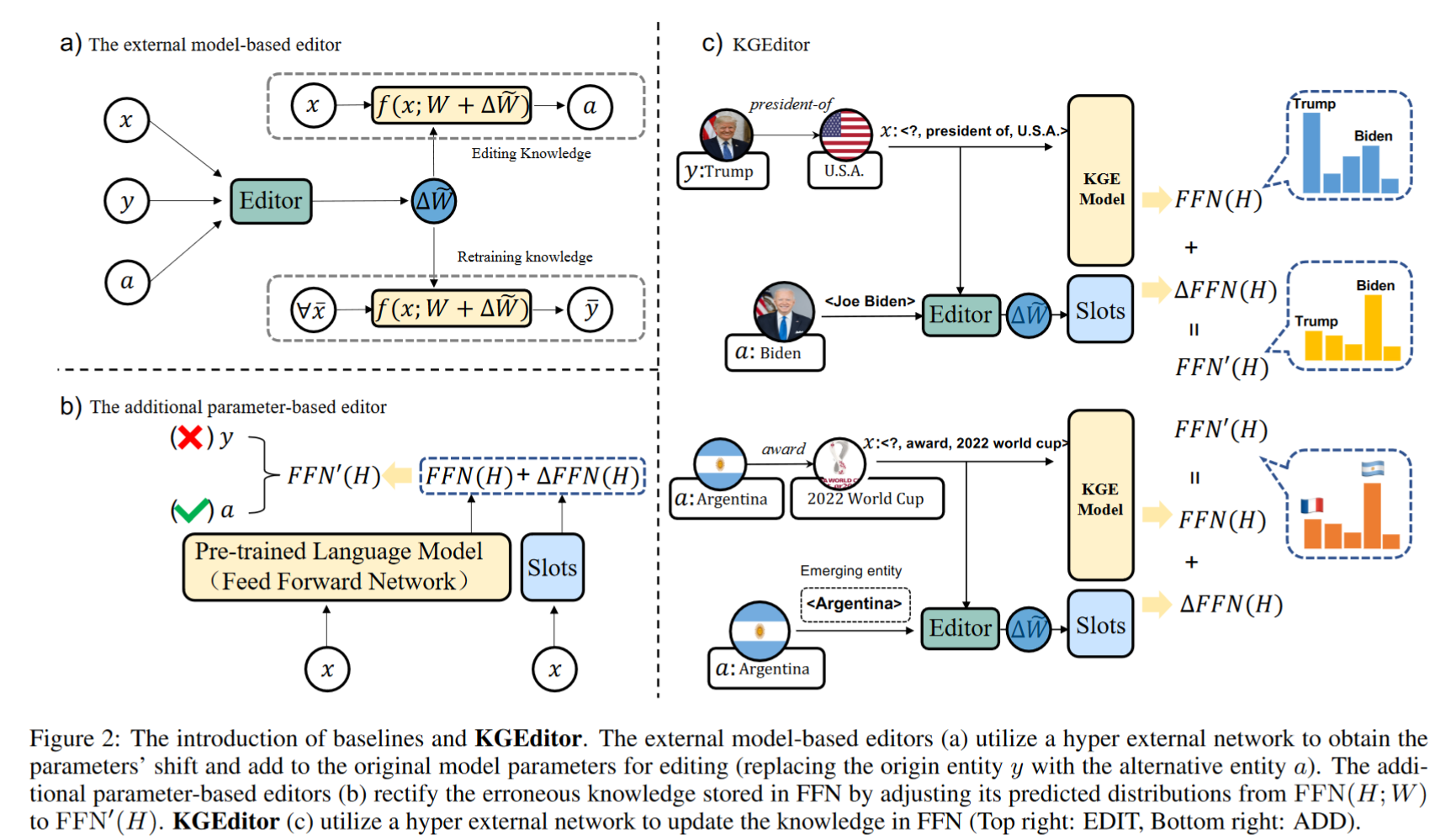

External model-based editor

Edits wrong knowledge in language models by using a hypernetwork to predict the weight update during inference.

Cons: requires fine-tuning a large number of parameters.

Additional parameter-based editor

Modifies the model's output with an additional small model.

Cons: poor performance.

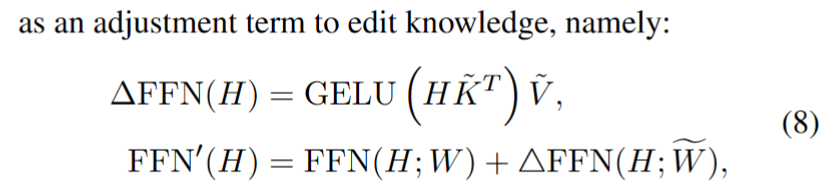

KGE editor

Results